
Normalization—the mainstreaming of people and ideas previously banished from public life for good reason—has become the operative description of a massive societal shift toward something awful. Whether it’s puff pieces on neo-Nazis in major national newspapers or elected leaders who are also documented sexual predators, a good deal of work goes into making the previously unthinkable seem mundane or appealing. I try not to imagine too often where these things might lead, but one previously unthinkable scenario, the openly public mass surveillance apparatus of George Orwell’s 1984, has pretty much arrived, and it has been thoroughly normalized, becoming both mundane and appealing. Networked cameras and microphones are installed throughout millions of homes, and millions of us carry them wherever we go. The twist is that we are the ones who installed them. As comic Keith Lowell Jensen remarked on Twitter a few years ago, “What Orwell failed to predict is that we’d buy the cameras ourselves, and that our biggest fear would be that nobody was watching.” By appealing to our basic human need for connection, to vanity and the desire for recognition, and to the seemingly instinctual drive for convenience, technology companies have persuaded millions of people to actively surveil themselves and each other. They incessantly gather our data, as Tim Wu shows in The Attention Merchants, and as a byproduct have given government agents and who-knows-who-else access to our private spaces. Computers, smartphones, and "smart" devices can nearly all be hacked or commandeered. Former director of national intelligence James Clapper reported as much last year, telling the U.S. Senate that intelligence agencies might make extended use of consumer devices for government surveillance. 
Webcams and “other internet-connected cameras,” writes Eric Limer at Popular Mechanics, “such as security cams and high-tech baby monitors, are… notoriously insecure.” James Comey and Mark Zuckerberg both cover the cameras on their computers with tape. The problem is far from limited to cameras. “Any device that can respond to voice commands is, by its very nature, listening to you all the time.” Although we are assured that those devices only hear certain trigger words, “the microphone is definitely on regardless” and “the extent to which this sort of audio is saved or shared is unclear.” (Recordings on an Amazon Echo are pending use as evidence in a murder trial in Arkansas.) Devices like headphones have even been turned into microphones, Limer notes, which means that speakers could be as well, and "Lipreading software is only getting more and more impressive." I type these words on a Siri-enabled Mac, with an iPad nearby and an iPhone in my pocket. I won’t deny the appeal (or, for many, the necessity) of connectivity. The always-on variety, with multiple devices controlling ever greater aspects of our lives, may not be justifiable. Nonetheless, 2017 could “finally be the year of the smart home.” Sales of the iPhone X may not meet Apple’s expectations, but that could have more to do with price or poor reviews than with the creepy new facial recognition technology—a feature likely to remain part of later designs, and one that makes users much less likely to cover or otherwise disable their cameras. The thing is, we mostly know this, at least abstractly. Bland bulleted how-to guides make the problem seem so ordinary that it begins not to seem like a serious problem at all. 
As an indication of how mundane insecure networked technology has become in the consumer market, major publications routinely run articles offering helpful tips on how to “stop your smart gadgets from ‘spying’ on you” and “how to keep your smart TV from spying on you.” Your TV may be watching you. Your smartphone may be watching you. Your refrigerator may be watching you. Your thermostat is most definitely watching you. Yes, the situation isn't strictly Orwellian: Oceania's constantly surveilled citizens did not comparison shop for, purchase, and customize their own devices voluntarily. (It's not strictly Foucauldian either, but it bears close resemblances.) Yet in proper Orwellian doublespeak, "spying" might have a very flexible definition depending on who is on the other end. We might stop "spying" by enabling or disabling certain features, but we might not stop "spying," if you know what I mean. So who is watching? CIA documents released by a certain unsavory organization show that the Agency might be, as the BBC segment at the top reports. So might any number of other interested parties, from data-hoarding corporate bots to tech-savvy voyeurs looking to get off on your candid moments. We might assume that someone could have access at any time, even if we use the privacy controls. That so many people have become dependent on their devices, and will become increasingly so in the future, makes the question of what to do about it a trickier proposition.


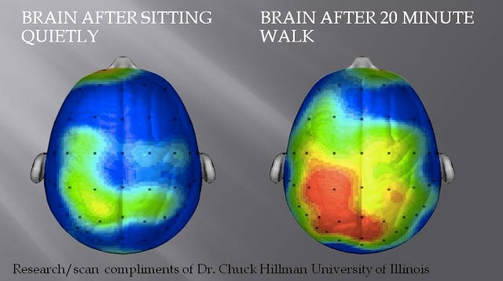

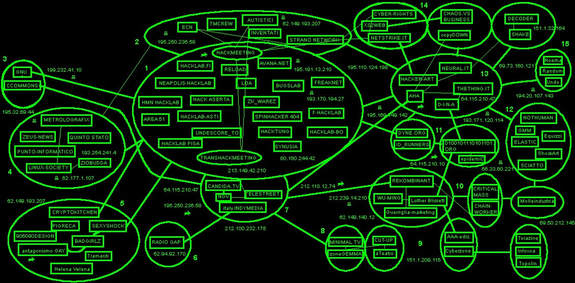

In the United States and the UK, we've seen the emergence of a multibillion-dollar brain training industry, premised on the idea that you can improve your memory, attention and powers of reasoning through the right mental exercises. You've likely seen software companies and web sites that market games designed to increase your cognitive abilities. And if you're part of an older demographic, worried about your aging brain, you've perhaps been inclined to give those brain training programs a try. Whether these programs can deliver on their promises remains an open question--especially given that a 2010 scientific study from Cambridge University and the BBC concluded that there's "no evidence to support the widely held belief that the regular use of computerised brain trainers improves general cognitive functioning in healthy participants..." And yet we shouldn't lose hope. A number of other scientific studies suggest that physical exercise--as opposed to mental exercise--can meaningfully improve our cognitive abilities, from childhood through old age. One study led by Charles Hillman, a professor of kinesiology and community health at the University of Illinois, found that children who regularly exercise, as The New York Times reports, “displayed substantial improvements in ... executive function. They were better at ‘attentional inhibition,’ which is the ability to block out irrelevant information and concentrate on the task at hand ... and had heightened abilities to toggle between cognitive tasks.” Tellingly, the children who had attended the most exercise sessions showed the greatest improvements in their cognitive scores. And, hearteningly, exercise seems to confer benefits on adults too. A study focusing on older adults already experiencing a mild degree of cognitive impairment found that resistance and aerobic training improved their spatial memory and verbal memory. 
Another study found that weight training can decrease brain shrinkage, a process that occurs naturally with age. If you're looking to get the gist of how exercise promotes brain health, it comes down to this: Exercise triggers the production of a protein called brain-derived neurotrophic factor, or BDNF, which helps support the growth of existing brain cells and the development of new ones. With age, BDNF levels fall; this decline is one reason brain function deteriorates in the elderly. Certain types of exercise, namely aerobic, are thought to counteract these age-related drops in BDNF and can restore young levels of BDNF in the aged brain. That's how The Chicago Tribune summarized the findings of a 1995 study conducted by researchers at the University of California-Irvine. You can get more of the nuts and bolts by reading The Tribune's recent article, The Best Brain Exercise May Be Physical. (Also see Can You Get Smarter?) You may now be wondering: what's the right dose of exercise for the brain? As it happens, Gretchen Reynolds, the phys ed columnist for The Times' Well blog, wrote a column on this a few years back. Although the science is still far from conclusive, a study conducted by The University of Kansas Alzheimer’s Disease Center found that small doses of exercise could lead to cognitive improvements. Writes Reynolds, "the encouraging takeaway from the new study ... is that briskly walking for 20 or 25 minutes several times a week — a dose of exercise achievable by almost all of us — may help to keep our brains sharp as the years pass."

The article is taken from:

by Himanshu Damle

On November 28, 2010, Wikileaks, along with the New York Times, Der Spiegel, El Pais, Le Monde, and The Guardian, began releasing documents from a leaked cache of 251,287 unclassified and classified US diplomatic cables, copied from the closed Department of Defense network SIPRNet. The US government was furious. 
In the days that followed, different organizations and corporations began distancing themselves from Wikileaks. Amazon Web Services declined to continue hosting Wikileaks’ website, and on December 1, removed its content from its servers. The next day, the public could no longer reach the Wikileaks website at wikileaks.org; Wikileaks’ Domain Name System (DNS) provider, EveryDNS, had dropped the site from its entries on December 2, temporarily making the site inaccessible through its URL. Shortly thereafter, what would be known as the “Banking Blockade” began, with PayPal, PostFinance, Mastercard, Visa, and Bank of America refusing to process online donations to Wikileaks, essentially halting the flow of monetary donations to the organization. Wikileaks’ troubles attracted the attention of Anonymous, a loose group of internet denizens, and in particular a small subgroup known as AnonOps, who had been engaged in a retaliatory distributed denial of service (DDoS) campaign called Operation Payback, targeting the Motion Picture Association of America and other pro-copyright, antipiracy groups since September 2010. A DDoS action is, simply, when a large number of computers attempt to access one website over and over again in a short amount of time, in the hopes of overwhelming the server and rendering it incapable of responding to legitimate requests. Anons, as members of the Anonymous subculture are known, were happy to extend Operation Payback’s range of targets to include the forces arrayed against Wikileaks and its public face, Julian Assange. On December 6, they launched their first DDoS action against the website of the Swiss banking service, PostFinance. 
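
To make "overwhelming the server" concrete, here is a toy capacity model in Python. It is a sketch under stated assumptions, not a description of any real server: the function name `legit_share_served` and all the numbers are hypothetical, and the model assumes a server with a fixed request capacity that drops excess load uniformly at random, ignoring timeouts, connection limits, caching, and filtering.

```python
def legit_share_served(capacity_rps: float, legit_rps: float, flood_rps: float) -> float:
    """Return the fraction of legitimate requests the server answers.

    Toy assumption (hypothetical): the server answers at most `capacity_rps`
    requests per second and, when overloaded, drops requests uniformly at
    random, so legitimate traffic gets the same share as flood traffic.
    """
    if capacity_rps <= 0:
        raise ValueError("capacity must be positive")
    total = legit_rps + flood_rps
    if total <= capacity_rps:
        return 1.0  # under capacity: every request is answered
    return capacity_rps / total  # overloaded: each request has the same chance


# Hypothetical numbers: 1,000 req/s capacity, 200 req/s of ordinary visitors.
print(legit_share_served(1000, 200, 0))         # 1.0
# 4,000 participants each sending 5 req/s add 20,000 req/s of flood traffic.
print(legit_share_served(1000, 200, 4000 * 5))  # about 0.0495, roughly 1 in 20
```

Under this crude model no single participant does much; it is the sheer number of senders that pushes the legitimate share toward zero, which is why these actions depend on many people joining at once.
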
Over the course of the next four days, Anonymous and AnonOps would launch DDoS actions against the websites of the Swedish Prosecution Authority, EveryDNS, Senator Joseph Lieberman, Mastercard, two Swedish politicians, Visa, PayPal, amazon.com, and others, forcing many of the sites to experience at least some downtime. For many in the media and the public at large, Anonymous’ December 2010 DDoS campaign was their first exposure to the use of this tactic by activists, and the exact nature of the action was unclear. Was it an activist action, a legitimate act of protest, an act of terrorism, or a criminal act? These DDoS actions – concerted efforts by many individuals to bring down websites by making repeated requests of the websites’ servers in a short amount of time – were covered extensively by the media. This coverage was inconsistent in its characterization but was open to the idea that these actions could be legitimately political in nature. In the eyes of the media and public, Operation Payback opened the door to the potential for civil disobedience and disruptive activism on the internet. But Operation Payback was far from the first use of DDoS as a tool of activism. Rather, DDoS actions have been in use for over two decades, in support of activist campaigns ranging from pro-Zapatista actions to protests against German immigration policy and trademark enforcement disputes…

taken from:

by Paul Handley

The US National Security Agency, which operates this ultra-secure data collection center in Utah, is one of the key US spying operations turning to artificial intelligence to help make sense of the massive amounts of digital data it collects every day. 
WASHINGTON (AFP) – Swamped by too much raw intel data to sift through, US spy agencies are pinning their hopes on artificial intelligence to crunch billions of digital bits and understand events around the world.

Dawn Meyerriecks, the Central Intelligence Agency’s deputy director for technology development, said this week the CIA currently has 137 different AI projects, many of them with developers in Silicon Valley. These range from trying to predict significant future events, by finding correlations in data shifts and other evidence, to having computers tag objects or individuals in video that can draw the attention of intelligence analysts. Officials of other key spy agencies at the Intelligence and National Security Summit in Washington this week, including military intelligence, also said they were seeking AI-based solutions for turning terabytes of digital data coming in daily into trustworthy intelligence that can be used for policy and battlefield action.

– Social media focus –

AI has widespread functions, from battlefield weapons to the potential to help quickly rebuild computer systems and programs brought down by hacking attacks, as one official described. But a major focus is finding useful patterns in valuable sources like social media. Combing social media for intelligence in itself is not new, said Joseph Gartin, head of the CIA’s Kent School, which teaches intelligence analysis. “What is new is the volume and velocity of collecting social media data,” he said. In that example, artificial intelligence-based computing can pick out key words and names but also find patterns in data and correlations to other events — and continually improve on that pattern finding. AI can “expand the aperture” of an intelligence operation looking for small bits of information that can prove valuable, according to Chris Hurst, the chief operating officer of Stabilitas, which contracts with the US intelligence community on intel analysis. 
“Human behavior is data and AI is a data model,” he said at the Intelligence Summit. “Where there are patterns we think AI can do a better job.”

– Eight million analysts –

The volume of data that can be collected increases exponentially with advances in satellite and signals intelligence collection technology. “If we were to attempt to manually exploit the commercial satellite imagery we expect to have over the next 20 years, we would need eight million imagery analysts,” Robert Cardillo, director of the National Geospatial-Intelligence Agency, said in a speech in June. Cardillo said his goal is to automate 75 percent of analysts’ tasks, with a hefty reliance on AI operations that can build on what they learn automatically. Washington’s spies are not the only ones turning to AI for future advantage: Russian President Vladimir Putin declared last week that artificial intelligence is a key to power in the future. “Whoever becomes the leader in this sphere will become the ruler of the world,” he said, according to Russian news agencies. The challenge, US officials said, is getting the “consumers” of their intelligence product — like policy makers, the White House and top generals — to trust reports that have a significant AI component. “We produce a presidential daily brief. We have to have really, really good evidence for why we reach the conclusions that we do,” said Meyerriecks. “You can’t go to leadership and make a recommendation based on a process that no one understands.”

taken from:

One of many things I love about writing—that is, engaging in writing as an activity—is how it facilitates a discovery of connections between otherwise unrelated things. Writing reveals and even relies upon analogies, metaphors, and unexpected similarities: there is resonance between a story in the news and a medieval European folktale, say, or between a photo taken in a war-wrecked city and an 18th-century landscape painting. These sorts of relations might remain dormant or unnoticed until writing brings them to the foreground: previously unconnected topics and themes begin to interact, developing meanings not present in those original subjects on their own.

Wildfires burning in the Arctic might bring to mind infernal images from Paradise Lost or even intimations of an unwritten J.G. Ballard novel, pushing a simple tale of natural disaster to new symbolic heights, something mythic and larger than the story at hand. Learning that U.S. Naval researchers on the Gulf Coast have used the marine slime of a “300-million-year old creature” to develop 21st-century body armor might conjure images from classical mythology or even from H.P. Lovecraft: Neptunian biotech wed with Cthulhoid military terror. In other words, writing means that one thing can be crosswired or brought into contrast with another for the specific purpose of fueling further imaginative connections, new themes to be pulled apart and lengthened, teased out to form plots, characters, and scenes.

In addition, a writer of fiction might stage an otherwise straightforward storyline in an unexpected setting, in order to reveal something new about both. It’s a hard-boiled detective thriller--set on an international space station. It’s a heist film--set at the bottom of the sea. It’s a procedural missing-person mystery--set on a remote military base in Afghanistan.

Thinking like a writer would mean asking why things have happened in this way and not another—in this place and not another—and seeing what happens when you begin to switch things around. It’s about strategic recombination. I mention all this after reading a new essay by artist and critic James Bridle about algorithmic content generation as seen in children’s videos on YouTube. The piece is worth reading for yourself, but I wanted to highlight a few things here.

In brief, the essay suggests that an increasingly odd, even nonsensical subcategory of children’s video is emerging on YouTube. The content of these videos, Bridle writes, comes from what he calls “keyword/hashtag association.” That is, popular keyword searches have become a stimulus for producing new videos whose content is reverse-engineered from those searches.
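
Bridle's “keyword/hashtag association” can be illustrated with a deliberately naive sketch: combine popular search terms into candidate titles and rank them by how much search traffic they might attract. The function `ranked_titles` and the popularity scores below are hypothetical and invented for illustration; this is not how any particular channel, or YouTube itself, actually works.

```python
from itertools import combinations


def ranked_titles(popularity: dict[str, int], k: int = 2, top: int = 3) -> list[str]:
    """Combine k search terms into candidate titles, ranked by the summed
    popularity of the terms each title contains (a hypothetical heuristic)."""
    # Sorting the terms first makes the output deterministic when scores tie.
    combos = combinations(sorted(popularity), k)
    ranked = sorted(combos, key=lambda combo: sum(popularity[t] for t in combo), reverse=True)
    return [" ".join(combo) for combo in ranked[:top]]


# Made-up popularity scores for a handful of search terms.
popularity = {"surprise eggs": 90, "finger family": 80, "learn colors": 70, "superhero": 40}
print(ranked_titles(popularity))
# ['finger family surprise eggs', 'learn colors surprise eggs', 'finger family learn colors']
```

Nothing in the procedure asks whether a combination makes sense; it only asks whether the words are searched for, which is one way nonsensical titles and content can emerge.
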

by Steven Craig Hickman

The line that separates idealism from materialism concerns precisely the status of this circle: the “teleological” formula—“a thing is its own result, it becomes what it always already was.”

— Slavoj Zizek


Australian scientists recently discovered that reality does not exist until you measure it:

If one chooses to believe that the atom really did take a particular path or paths then one has to accept that a future measurement is affecting the atom’s past, said Truscott.

“The atoms did not travel from A to B. It was only when they were measured at the end of the journey that their wave-like or particle-like behavior was brought into existence,” he said.

Associate Professor Andrew Truscott and Ph.D. student Roman Khakimov used helium atoms and laser screens to perform an experiment that had not been possible until now. The upshot is that they found that quantum information from the future had an effect on the past, and that this effect could be quantified and measured. Quantum weirdness…

Strangely, reading Philip K. Dick’s letter to Peter Fitting dated June 28, 1974, collected in The Exegesis, we come across ideas that would be reappropriated in William Gibson’s latest novel, The Peripheral, which allows the transfer of information to and from the future. Dick made this motif a cornerstone of several novels, such as Time Out of Joint, Ubik, The Game Players of Titan and others… In his new novel, Gibson lets characters be brought into the future through a peripheral (a cyborg avatar that users can connect to from another location) by way of a quantum server.

Dick speaks of such a notion below:

Dear Peter,

[…] In regards to some of the intellectual, theoretical subjects all of us discussed the day you and your friends were here to visit, I recall in particular my statement to you (which I believe you got on your tape, too) that “the universe is moving backward,” a rather odd statement on the face of it I admit. What I meant by that is something which at the time I could not really express, having had an experience, several in fact, but not having the terms. Now, by having read further, I have some sort of terms, and would like to describe some of my personal experiences using, in a pragmatic way, the concept of tachyons, which are supposed to be particles of cosmic origin which fly faster than light and consequently in a reversed time direction. “They would thus,” Koestler says, “carry information from the future into our present, as light and X rays from distant galaxies carry information from the remote past of the universe into our now and here.” Koestler also points out that according to modern theory the universe is moving from chaos to form; therefore tachyon bombardment would contain information which expressed a greater degree of Gestalt than similar information about the present; it would, thus at this time continuum, seem more living, more animated by a conscious spirit… This would definitely give rise to the idea of purpose, in particular purpose lying in the future. Thus we now have a scientific method of considering the notion of teleology, I think, which is why I am writing you now, to express this, my own sense of final causes, as we discussed that day.1

This notion that beings of the future could control aspects of the past through the use of quantum information or tachyon emissions doesn’t seem so far-fetched now that scientists have shown it to be true, at least at the quantum level. Weirdness seems to be the order of the day!

Of course, this same notion that Dick mentions in connection with teleology is the very thing Zizek formulates, using the concept of “retroactive causality,” as follows:

Ruda opposes idealist (Hegel’s) and materialist (Badiou’s) dialectics with regard to the tension between dialectical and non-dialectical aspects of the dialectical process: from the materialist standpoint, the dialectical process “relies upon something that is not itself dialectically deducible”: “materialist dialectics has to be understood as a procedure of unfolding consequences, of the attempt to cope with something that due to the logic of retroactivity logically lies before it, is prior to it.” Ruda is, of course, not only Hegelian enough to recognize that this priority is retroactive (the event is prior to the unfolding of its consequences, but this can be asserted only once these consequences are here); he even goes to the end and proposes the Hegelian formula of the closed teleological loop between the event and its consequences— the event engenders consequences which constitute the actuality of the event, i.e., which retroactively posit their own cause, so that the event is its own cause, or, rather, its own result: the consequences that change the world are engendered by something, the event, which itself is nothing but what it will have generated … an event is the creation of the conditions of the possibility of the consequences of an event— i.e. of the event itself. This is why it had the paradoxical structure of naming a multiplicity which belongs to itself … any event is nothing but the ensemble of the consequences it yields— although it is at the same time the enabling cause of these very consequences.2

This is the notion that the collapse of the future upon the present event retroactively posits the event as a consequence of this future decision; therefore future information collapses upon the past in such a way that the causal system appears teleological (from our standpoint) when in fact it is retroactive (from the future decisional process). What we’re saying is that Time is weirder than we would like to believe… it’s as if from our perspective things, events, etc. have a purpose, a teleology; but the truth is that it is much weirder: time is not bound to the arrow of some forward, linear movement, but can affect our present moment from the future… it seems time moves both ways; or maybe time is a field and a force rather than, as Kant thought, a form of intuition internal to the mind. Either way, our conceptions of reality will have to change, because our views of Time are changed by this experiment and its successful implementation. The future has power over us, a quantitative aspect that dictates our realities before we become aware of them as such: we are the result of these selective processes. This changes everything.

Addendum*:

Nick Land, on Urban Future (2.1), asks of the statement below: “Is there really a difference being noted here?”

… the collapse of the future upon the present event retroactively posits the event as a consequence of this future decision; therefore future information collapses upon the past in such a way that the causal system appears teleological (from our standpoint) when in fact it is retroactive (from the future decisional process). What we’re saying is that Time is weirder than we would like to believe … it’s as if from our perspective things, events, etc. have a purpose, a teleology; but, the truth is that it is much weirder: time is not bound to the arrow of some forward, linear movement, but can affect our present moment from the future …

My original thought actually concerned a hypothetical particle: the tachyon, a particle that always moves faster than light, whose name was coined by Gerald Feinberg. Dick used this notion in several of his novels. Most physicists think that faster-than-light particles cannot exist because they are not consistent with the known laws of physics. If such particles did exist, they could be used to build a tachyonic antitelephone and send signals faster than light, which (according to special relativity) would lead to violations of causality. Potentially consistent theories that allow faster-than-light particles include those that break Lorentz invariance, the symmetry underlying special relativity, so that the speed of light is not a barrier.
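
Why faster-than-light signals threaten causality can be seen from the standard Lorentz transformation of a time interval. The worked version below uses only textbook special relativity; the symbols $u$ (signal speed), $v$ (observer speed), $\Delta x$ and $\Delta t$ (distance and time between sending and receiving) are introduced here for illustration.

```latex
\Delta t' = \gamma\left(\Delta t - \frac{v\,\Delta x}{c^{2}}\right),
\qquad \gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}

\Delta x = u\,\Delta t,\ \Delta t > 0 \;\Rightarrow\;
\Delta t' = \gamma\,\Delta t\left(1 - \frac{uv}{c^{2}}\right)

u > c \ \text{and}\ \frac{c^{2}}{u} < v < c \;\Rightarrow\; \frac{uv}{c^{2}} > 1 \;\Rightarrow\; \Delta t' < 0
```

For any signal with $u \le c$, the factor $1 - uv/c^{2}$ stays positive for every observer with $|v| < c$, so all observers agree that reception follows emission. For $u > c$ there is always an admissible observer for whom $\Delta t' < 0$, that is, the signal arrives before it is sent; chaining an outgoing and a returning superluminal signal in suitably moving frames is what lets a tachyonic antitelephone deliver a reply before the original message was sent.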

According to the current understanding of physics, no such faster-than-light transfer of information is actually possible. For instance, the hypothetical tachyon particles which give the device its name do not exist even theoretically in the standard model of particle physics, due to tachyon condensation, and there is no experimental evidence that suggests that they might exist. The problem of detecting tachyons via causal contradictions has been treated scientifically. Yet, as we know, tachyons are hypothetical entities that have never been proven to exist. It’s in this second sense that Gibson uses a similar device to allow for informational time travel through the use of a quantum server into the Peripherals, since light is in this sense the time barrier that classical physics cannot breach; at quantum levels of abstraction, however, it can be breached. This is the time anomaly-paradox that the experiment appears to show: classical physics breached by using macro-technologies upon quantum processes. All that the scientists in the new test prove is that if one chooses to believe that the atom really did take a particular path or paths, then one has to accept that a future measurement is affecting the atom’s past… If this is true, then the paradox that Einstein and Arnold Sommerfeld presented in their thought experiment in 1910, of how faster-than-light signals can lead to a paradox of causality, has been confirmed in the experiment. Obviously it will need to be replicated in other labs, and there will be discussions on exactly how to interpret the facts of the case. This was just one suggested path in reasoning, not something set in stone.

1. Dick, Philip K. (2011-11-08). The Exegesis of Philip K. Dick (Kindle Locations 367-377). Houghton Mifflin Harcourt. Kindle Edition.

2. Zizek, Slavoj (2014-10-07). Absolute Recoil: Towards A New Foundation Of Dialectical Materialism (pp. 72-73). Verso Books. Kindle Edition.

taken from:


Science & Technology

Archives

March 2020
