
by Alexander Galloway

It began with a list. When addressing “the birth of a new medium,” Janet Murray responded with a list of properties. Digital environments have four essential properties, she argued. Digital environments are procedural, participatory, spatial, and encyclopedic.

When tasked with the definition of “new media” a few years later, Lev Manovich answered in a similar way. “We may begin…by listing,” he claimed, before issuing a stream of empirical references: “the Internet, Web sites, computer multimedia, computer games, CD-ROMs and DVD, virtual reality.” Yet Manovich’s primary litany was but a prelude to another one, the second list more important for him: a series of five “principles” or “general tendencies” for new media, namely numerical representation, modularity, automation, variability, and transcoding. And the fourth principle (variability) was itself so internally variable that it required its own sub-list enumerating no fewer than seven “particular cases of the variability principle.” Several years later, in their statement on “platform studies,” Nick Montfort and Ian Bogost devised a list of their own. Analyses of digital media typically attend to “five levels,” they observed, from the level of reception and operation, to the interface, to questions of form and composition, to the software code, and finally to the hardware platform. These five levels correspond somewhat incongruously to the six layers in Benjamin Bratton’s “stack,” whereas the official OSI reference model enumerates no fewer than seven layers. In a 2008 lecture on “Software Studies,” Warren Sack offered six characteristics, which, incidentally, differ from the six characteristics described by the editors of New Media: A Critical Introduction. No doubt I have my own lists as well. No doubt they are also different. Shall we not pause to compile a list of all these lists, collecting and categorizing the various attempts to define digital media by denoting its qualities and characteristics? Is it four or five, why not six or seven, and do any of these properties, layers, and qualities correlate? So many characteristics, arranged through so many aspects. So many adjectives. Why all these lists? From one point of view, the lists make perfect sense. 
Computers are list processors, after all, as LISP programmers are keen to mention. Yet from another point of view, the recourse to listing indicates a fundamental mismatch between the method of analysis and the object under scrutiny. Even as the world is scanned and digitized, the types of things listed — properties, characteristics, and qualities — are themselves indexes for extra-digital phenomena. A quality is precisely the thing that hasn’t yet been converted into discrete code. A characteristic refers to some detail, empirical or practical, that helps explain this particular thing, as opposed to the general abstract class to which it belongs. And properties are similar to characteristics; properties are something like “free variables” that fill out the particular instantiation of an entity. For this reason, software applications will frequently “externalize” properties, housing them in non-executable preference files, classifying them essentially as constants. So many adjectives: the trouble being that computer languages have plenty of nouns and verbs but very few adjectives. My point is that we’ve exited the digital. The list is an analog method. And I mean lists, not sets or arrays. The list represents a collection of things that don’t go together — even when placed in apposition — and that can’t, in principle, be reduced to a single concept that would subsume them. The list resembles the category or the type, but only superficially — as in Borges’ notorious fabulation, those animals “(a) belonging to the Emperor, (b) embalmed, (c) tame, (d) sucking pigs, (e) sirens, (f) fabulous, (g) stray dogs…” — proving instead the failure of the category, the insufficiency of a symbolic order that can only be approximated through a series of depictions. (This version of the list, recounted at the start of Michel Foucault’s The Order of Things, is the object of some consternation regarding its explicit orientalism. Indeed there is something orientalist about all lists.) 
Likewise properties, characteristics, and qualities are analog technologies. They are numbers that refer to things that are not numbers. A quality can be digitized, of course. But in its essence, a quality remains stubbornly within the realm of analog experience. Recall Agamben’s discussion of the example. Whenever you see someone raise an example — or equally a counter-example — you know you are neck deep in analog territory. Why? Because the example is the thing that cannot be accounted for by the symbol, the name, or the rule. One “appeals” to the example, just as one appeals to experience. By contrast, the digital is the regime in which the example is irrelevant, which is maybe why I’m frequently confused when empiricists try to formulate digital theory. In sum, if you are working on the digital, ask yourself… Am I describing lists of qualities? Am I drafting litanies of examples? If you answer yes, you are likely working on an analog mirror of the digital, with the digital itself still lost elsewhere, as yet un-analyzed.
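
The earlier aside that software applications “externalize” properties into non-executable preference files, treating them essentially as constants, describes a common engineering pattern. What follows is a minimal sketch of that pattern, assuming a JSON preferences file; the file name, the keys, and the default values are hypothetical and not drawn from any particular application. The program logic never hard-codes these values: it reads them from outside and exposes them as a read-only mapping.

```python
import json
import tempfile
from pathlib import Path
from types import MappingProxyType

# Hypothetical defaults: the keys and values are illustrative only.
DEFAULTS = {"theme": "dark", "font_size": 12, "autosave": True}


def load_preferences(path: Path) -> MappingProxyType:
    """Load externalized properties from a non-executable JSON file.

    Values found in the file override the defaults. The result is a
    read-only view, so the running program can consult the properties
    but cannot reassign them, i.e. it treats them as constants.
    """
    prefs = dict(DEFAULTS)
    if path.exists():
        prefs.update(json.loads(path.read_text(encoding="utf-8")))
    return MappingProxyType(prefs)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pref_file = Path(tmp) / "preferences.json"  # hypothetical file name
        pref_file.write_text(json.dumps({"font_size": 14}), encoding="utf-8")
        prefs = load_preferences(pref_file)
        print(prefs["theme"], prefs["font_size"])  # dark 14
```

Changing the file changes the program's behaviour without touching its code, which is the sense in which such properties sit outside the executable logic.
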


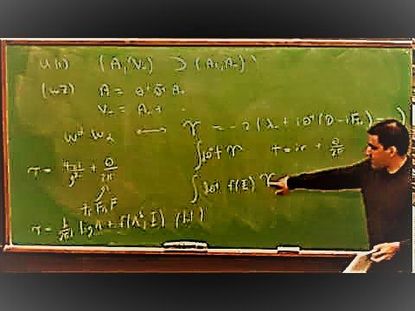

by Achim Szepanski

In antiquity, the word “Dia-graphein” referred to the inscription of a line, namely in geometric tables, lists, musical notations, etc. Not only were plans and figures designed with the help of lines; there were also numerous markings and even crossings-out, which then called the stability of the respective diagram into question, but sometimes contained, in embryonic form, drafts for further diagrams. Neither mathematics nor music is to be understood as a language; rather, they contain diagrams that should be analysed not from the point of view of representation but from that of action/production, when they become operative in order to temporalise or rhythmise thinking and its speakers.1 Today, diagrams are an important integral part of capital’s mechanized production of information and knowledge, and they must be defined less as media of representation than as stored states of abstract calculating machines that are always on the verge of becoming operative. (Cf. Miyazaki 2013: 30) Examples of diagrams include algorithms and algebra, logic and topology, plans and schemes of data storage, circuits and tables, charts and graphs of the economy, and finally also musical scores. In the sciences, two positions are usually distinguished with regard to the definition of the diagram: 1) the diagram as an entity, usually represented in graphic form, which serves to solve a problem or as a tool for systematization, presenting a certain number of perceptually oriented conclusions; 2) the diagram as a resolution-oriented cartography or as the multiplier of a productive unfolding process. While the first definition describes the visual diagram that iconicizes a relation in terms of its form and potency, the second favors the production of relations, that is, the design and its links, the solutions and resolutions, generally the temporal process, or as Miyazaki puts it, the “algorhythm” of events. 
(Ibid.: 36) The second definition thus clearly emphasizes the processual arrangement of signs, objects and schemes, to which, in the post-digital age, universal streaming must certainly be added, although here the diagram mostly remains invisible in the black box. While the first concept of the diagram favors the retrospective component of summarizing facts, the second emphasizes the projective component, insofar as specific time vectors, by generating trajectories, point to directions to be pursued in the future and thus open up a field for action. If one also emphasizes the concept of operationality, one must point to the hybrid term “algorhythm” introduced by Miyazaki, whose medium is numbers, which are much clearer than letters and sentences. Diagrams stored in calculating machines integrate the sequential, time-measurable and physically real effects of algorithmically programmed symbolic logics. (Ibid.) In doing so, the diagrams execute “algorithms” which are responsible for generating patterns and which constantly generate new patterns in open processes. Circuits, plans, scores or tables, when they become operative or act in a temporalized manner, are transformed in specific structures into rhythms, which in turn are implemented in real machines. (Ibid.) From the outset, diagrams require a relational logic (the relata are secondary to the relations), which serves to place non-qualified relations and forces in a processual relationship.2 In contrast to Peirce’s “icon of the relationship”, Guattari conceives the diagram as a “conjunction of matter and semiotics”, placing the emphasis from the outset on the relation and not on the icon or the similarity of the diagram to the object. 
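
The idea that a score or table is a stored state which only becomes a rhythm once it is executed can be made tangible with a toy example. In the sketch below, a drum score is a plain table that does nothing by itself; a small loop “executes” it and turns it into a time-ordered sequence of events. This is only an illustration under stated assumptions: the pattern, the instrument names, the tempo, and the sixteenth-note grid are hypothetical choices, not taken from Miyazaki or Guattari.

```python
from typing import Dict, List, Tuple

# A hypothetical score: each row is an instrument, each character one
# sixteenth-note step ("x" = hit, "." = rest). Unread, it is inert data.
SCORE: Dict[str, str] = {
    "kick": "x...x...x...x...",
    "hat":  "..x...x...x...x.",
}


def execute(score: Dict[str, str], bpm: float) -> List[Tuple[float, str]]:
    """Turn the stored table into time-ordered (seconds, instrument) events."""
    step = 60.0 / bpm / 4  # duration of one sixteenth note in seconds
    events = [
        (i * step, instrument)
        for instrument, row in score.items()
        for i, symbol in enumerate(row)
        if symbol == "x"
    ]
    return sorted(events)


if __name__ == "__main__":
    for t, name in execute(SCORE, bpm=120):
        print(f"{t:5.3f}s  {name}")
```

The same table yields a different rhythm at a different tempo, which is one way of reading the claim that the diagram’s temporal character appears only in its operation.
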
Guattari even excludes the pictorial character of the diagram at the abstract level, insofar as he thinks the diagram in terms of its unfinished material-semiotic, functional and relational consistency, which always unfolds in quite different registers of alterity, a universe of virtualities and their actualizations. (Guattari 2014: 61) Guattari assumes that diagrams create a conjunction between deterritorialized signs and deterritorialized objects and thus exert a direct effect on things, insofar as they involve a material technology and are part of a complex manipulation of (abstract) sign machines. According to one of Guattari’s definitions, “abstract machines form a kind of material of change – I call it ‘optional matter’ – that is made of possibilities of the material which catalyse connections, de-stratifications and reterritorialisations in the world of the living as well as that of the inanimate” (Guattari 1979: 13). Here Guattari speaks of the optionality of matter, whereas together with Deleuze, in A Thousand Plateaus, he emphasizes much more strongly the operationality of the diagram itself and, subsequently, its action in the material when it is situated at the level of the abstract machine, that is, beyond the distinction between expression (linguistics, semiotics and meaningful performance) and structure (matter, body, signal), making the diagram itself part of a pure matter-function. Guattari ties his concept of the diagram closely to socio-economic conditions and the (digital) machines that correlate with them. These machines contain heterogeneous and dynamized elements (materializations of practices) that are absolutely necessary for the production, construction or transduction of economic processes today: plans, schemes and notations. What distinguishes a-signifying semiotics, with their diagrammatics and configurations, is the combination of abstraction and materiality. 
Media machines are thus to be understood as the results of material-semiotic-discursive practices that are condensed in scientific apparatuses and institutions, where they produce branchings, interconnections and cuts in the material; only these cuts lead to the folding of certain objects (and subjects), by drawing certain boundaries and thus resolving ontic-discursive indeterminacy. (Cf. Barad 2012) The diagram again refers to the concept of agency, which according to Deleuze/Guattari consists on the one hand of utterances and on the other of machines, of what can be said and what can be done. In this context, the technological terms “branching pattern” and “interconnection instance” indicate not only the functional and non-subjective potentiality of the diagrammatic, but also the moment of execution, the operative and the rhythmic. The often graphically oriented representation of the diagram is thus already clearly conceived here in terms of execution. In his book L’inconscient machinique, Guattari directly addresses the productive achievements of diagrammatization when he writes that “the figures of expression involved in a diagrammatic process no longer aim to represent meanings or elements of meaning. The systems of algorithmic, algebraic and topological logic, the procedures of recording, storage and information processing used by mathematics, science, technology, harmonic and polyphonic music, do not aim to designate or imagine the morpheme of a referent that is already given, but to produce it qua their own machinicity”. (Guattari 1979: 224) The referent of the semiotic machine is thus not extinguished, as Jean Baudrillard, for instance, still assumes (Baudrillard 1982), but is generated in nuce by the diagrammatic systems.3 A relational concept of the diagram, which always also emphasizes the power relations inherent in a socio-economic formation, aims to describe processes in which power relations are continuously at work. 
Power here includes a “will to power pluralism”, which encompasses the multiplicity of forces and plural quanta of power. And Deleuze emphasizes that there is no diagram that does not, alongside the points it connects, release relatively free or unbound points: points of invention, of resistance, and of the possibility of a new distribution. At the same time, the diagram includes the distribution of capacities to affect and capacities to be affected. It is evident that the organization of economic, technological and political machines, indeed of all forms of social life, is no longer conceivable today without algorithms. The new technological nomos, however, remains heterogeneously enfolded, insofar as it functions and acts within the interplay of imperial national governments, transnational organizations (IMF, WTO, ECB, NGOs), transnational banks, hedge funds, corporations and companies like Google, Facebook, Apple, Amazon, etc. Within the framework of the quasi-transcendental context of total capital (the uneventfulness of transcendentalism can only be historical in the sense that it is shown when and where it became a historical event) and of virtualization-actualization circuits, algorithms serve to substantiate the differential patterns of a consensual world economy marked by the respective national and international potentials for accumulation, competition and conflict. What a few years ago was still called a computer has today shrunk to a tiny yet complex structure of silicon microcircuits, which can be integrated into intelligent objects, devices, gadgets, walls or even the human body, and is thus part of a technological environment that capital attempts to use as its constantly fluctuating digital agency and simultaneously to deploy as a technology of power. 
Digital media technologies have thus long since created intelligent environments, servomechanisms of cybernetic networks with which workplaces, private and public spaces, offices and buildings are designed and interconnected as complex communicative con-figurations, with multimedia nervous systems and communication machines connecting everything that can potentially be connected. Apple’s HealthKit links communication devices to various fitness equipment, while Apple’s HomeKit links them to the various devices in the home.4 Digital automation today is electro-computational, constantly generating simulations of feedback and other autonomous processes in a wide range of social settings. (Cf. Terranova 2014: 127) It unfolds in various networks consisting of electronic and neural connections, in which employees as well as users are integrated as quasi-automatic relay stations in a continuous flow of information from machines that process at different rhythms. In this process, digital automation mobilizes the production of the soul among the actors, involving the nervous system and the brain. It is in these agencies or networks that algorithms must be located if new models of automation are to be discussed. One should therefore understand algorithms as parts of different agencies, which include material structures (hardware, data, databases, memory, etc.) as well as sign systems and statements and, beyond that, the behaviour and actions of persons. Modern algorithms have to process ever-increasing amounts of data and encounter growing entropy in the data stream itself (Big Data), where potentially infinite amounts of data and information interfere with algorithmic processes until, in combination, they can even produce “alien” rules. Thus, algorithms neither comprise homogeneous sets of techniques nor guarantee the trouble-free execution of an automatic process of control. 
Usually, the algorithm is understood as a method of solving a task by means of a finite sequence of well-defined instructions (step-by-step instructions); it is a set of ordered steps and commands that precisely describe the solution of a problem, operating with data and numerical structures. Unlike a mathematical formula, an algorithm, which represents a calculation instruction, is always spoken of as a process (a description of the sequence of the respective calculation steps). Such a written formalization must fulfill the following functions: storage, operation, schematization and communicability; in other words, it must be coded (repeatable and symbolically notatable). It must not allow any interpretation; it is therefore unambiguous. On the one hand, the algorithm is regarded as a set of finite instructions (first-order cybernetics), i.e. finite algorithms generate finite complex structures. On the other hand, the algorithm is also capable of adaptation and variation when it refers to external stimulation and constantly changing circumstances in the environment (second-order cybernetics). On the meta-level, one can now assume that the first, problem-oriented approach favours an abstract concept, i.e. the algorithm contains an abstraction that has an autonomous existence, independent of the “implementation details”, the execution in particular programming languages, which themselves are part of a particular machinic architecture. Algorithms are thus to be understood in the technomathematical sense as abstract thought structures, which contain a finite sequence of unambiguously determined instructions that describe the path to the solution of a problem exactly and completely. In contrast, the machine-oriented variant emphasizes algorithmization more strongly as a practical process, i.e.
algorithms in the sense of practical computer science comprise abstract-symbolic control and steering structures, which are usually written as pseudocode or flowcharts, special forms of mathematical constructs. Their symbolically unambiguous coding is here already designed entirely for execution. And this is what distinguishes an algorithm from an algebraically notated mathematical formula. In the abstract model of the Turing machine, the efficiency of an algorithm does not yet play a role, as unlimited storage is assumed here, which is physically impossible. With Gödel it became clear that mathematics, or an axiomatic method, cannot prove a system and all of its propositions purely immanently and with recursive completeness, even if they were all true, so that special systems for solving problems of certain classes became more and more important, i.e. algorithmic-practical procedures, which in the end simply have to work. The incompleteness of the method also indicates the incompleteness of the calculation. Thus, algorithms have always had to be examined for their temporal efficiency and complexity, two factors that are inversely related to each other: in addition to memory complexity, time complexity must be taken into account, which raises the problem of how the number of calculation steps grows in relation to the symbol length of the input. A rhythm is built into the runtime analysis (O-notation) of each algorithm, and the runtime in turn determines that rhythm. The rhythm of the algorithm running in time is thus to be described in terms of its runtime, in which the efficiency of the algorithm, the compiler and the executing hardware all play a role. And the predetermined symbolized sequences of iterations (outputs) can in turn be used as inputs of the same algorithm and thus influence the course of further sequences: a distinction is made between predetermined iterative, recursive, and recursively conditioned algorithms.5 (Cf.
Miyazaki 2013) “Algorhythm”, a neologism coined by Shintaro Miyazaki by blending the terms algorithm and rhythm, is based on the writing down of symbolic instructions or codes stored in sequential form in diagrams, which in turn are implemented in real machines where they generate certain rhythmic patterns. (Ibid.: 37) The notation of time, which Miyazaki relates to the clock and ultimately to media studies as a whole, can be translated into specific automata more easily with the help of musical notation than with that of language, because music has a technomathematical under-complexity compared to language. According to Miyazaki, the component of time and the musical-sonic aspects should be essential for understanding media from the outset. With the invention of the telephone, the media technologies of music and speech finally coincided in the electrical signal. (Ibid.) At the same time, machines as automata, within the framework of their self-activity, increasingly decouple themselves from any external influences, whereby the cybernetic automaton here represents only a very special, differentiated arrangement. (Cf. Bahr 1983: 442) Self-activity must therefore be regarded as a decisive reference of the automaton. “Algorhythms” indicate that digital machines and their processes are divided into time units. They oscillate in the in-between of the continuous and the discrete, thus including a “disruptive flow” in the media machines of storage, transmission and processing; a flow that brings Miyazaki close to Derrida’s “différance”, which is here processed by algorhythmic machines and agencies in order ultimately to generate not only new modes of perception, forms of knowledge and habitats, but also potencies and fields of possibility that open up both disturbances and innovations in the field of media technologies. 
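
The textbook definition of the algorithm given earlier (a finite sequence of unambiguous instructions that solves a problem step by step) and the distinction between iterative and recursive algorithms can be illustrated with a classic case, Euclid’s algorithm for the greatest common divisor. The example is a standard one chosen here for illustration; it is not taken from Szepanski or Miyazaki, and it assumes non-negative integer inputs. Both versions perform the same steps: the iterative one feeds each pair of outputs back in as the next inputs within a loop, while the recursive one does so by calling itself. The step counter is an added illustration showing that each run is finite and can be measured in discrete steps.

```python
from typing import Tuple


def gcd_iterative(a: int, b: int) -> Tuple[int, int]:
    """Euclid's algorithm as a loop; returns (gcd, number of division steps).

    Assumes a and b are non-negative integers.
    """
    steps = 0
    while b != 0:
        # The outputs of one step (b, a mod b) become the inputs of the next.
        a, b = b, a % b
        steps += 1
    return a, steps


def gcd_recursive(a: int, b: int, steps: int = 0) -> Tuple[int, int]:
    """The same instructions expressed recursively: the function calls itself."""
    if b == 0:
        return a, steps
    return gcd_recursive(b, a % b, steps + 1)


if __name__ == "__main__":
    print(gcd_iterative(1071, 462))  # (21, 3)
    print(gcd_recursive(1071, 462))  # (21, 3)
```

Termination is guaranteed because the second argument strictly decreases with every step and cannot fall below zero, which is what makes the sequence of instructions finite.
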
As rhythms of the technical unconscious, the algorithms generate a trans-sonic cocoon, mostly inaudible to the human ear, within which mobile smartphones (the mobility of the Internet) today function as the most important receiving stations. However, these trans-sonic processes can be transformed into audible rhythms by means of various technical procedures. Algorithms are also integrated into calm technologies, i.e. into interconnections, dynamic agents and swarms, so that we have long since had to speak of an ecology of machines in which digital objects are increasingly liquefied and transformed into logistical processes (streaming), whereby various rhythms and potentials serve to make specific purposes more effective and to optimise them. Signal-based agencies (RFID) not only mark the respective objects with digital indices but also index their locations, so that spaces are synthesized with the symbolic and can be controlled technomathematically (monitoring). Writing and cabling systems are each specifically synthesized in order to optimize the storage and transmission and subsequently the calculation and control of information. The computer with which the codes are written cannot do without real-physical operations in time; this points to the time problem of algorithms and, finally, to the algorithm of these machines of knowledge. The industrial Internet will only become effective when three essential components (algorithmic devices, algorithmic systems, and algorithmic decision-making processes) come into contact with physical machines, infrastructures, networks, and fleets. One could say with Miyazaki that algorithms are ek-sistent as physically measurable, polymorphic structures, because, in contrast to the purely symbolic beat, rhythm as operativity, performativity, or materiality has always been coupled with reality. 
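
The time problem of algorithms, in the sense of time complexity discussed above (how the number of calculation steps grows with the length of the input, summarized in O-notation), can be illustrated by simply counting steps. The sketch below compares two standard search procedures on sorted lists of increasing length; the choice of procedures is ours, not the author’s. Linear search needs a number of comparisons proportional to n, i.e. O(n), while binary search needs about log2 n comparisons, i.e. O(log n). Searching for a value that is absent is an assumption made so that both procedures run through their worst case.

```python
from typing import List


def linear_search_steps(items: List[int], target: int) -> int:
    """Count the comparisons made by a linear scan (worst case O(n))."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items: List[int], target: int) -> int:
    """Count the comparisons made by binary search on a sorted list (O(log n))."""
    lo, hi, steps = 0, len(items) - 1, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        steps += 1
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


if __name__ == "__main__":
    for n in (10, 100, 1_000, 10_000):
        data = list(range(n))
        absent = n  # larger than every element, so neither search finds it
        print(n, linear_search_steps(data, absent), binary_search_steps(data, absent))
```

The two step counts grow at very different rates, which is what the O-notation abbreviates; the actual running time in seconds would additionally depend on the compiler or interpreter and the executing hardware.
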
Thus the algorhythmic oscillates between the symbolic and reality by modulating the gap, the crack between the material and the immaterial, with its time-serially rhythmic short circuits. The digital should therefore long since have ceased to be understood as something purely object-like and/or purely static; instead, one should follow its respective divisions, multiplications and trajectories, since digital scripts today generate specific loops and fields, complex forms of streaming. When we think of streaming, we think first of all of screen surfaces, then of the shift from static desktop computers to mobile laptops, notebooks and tablet devices. (One can classify the smartphone/mobile phone as private, the tablet as semi-public and television as public.) But there is another streaming or processing going on behind the screen surfaces in the various black boxes of the computer, a movement and trajectory, a series of lines that companies like Twitter follow in a very specific way, calculating and evaluating these processes and making them accessible for monetary exploitation. Thus, the singular human-machine interface is increasingly losing its importance compared to the interface between non-human agents and human structures. Algorithms, as part of the intrinsic value form of capital, cannot be exploited per se, but only if they are accessible to monetary capitalization as part of fixed capital, and today this takes place in the context of an accelerating accumulation of capital among the oligopolies of the Internet. From an economic point of view, therefore, they are to be understood as a form of fixed capital which, as an integrative and constituent part of the production process, contributes to the production of machine surplus value. 
To the extent that they constitute fixed and at the same time measuring capital, algorithms are partly responsible for the fact that human labor capacity becomes an infinitesimal, increasingly disappearing quantity in certain areas of production in which the aim is to optimize the process itself along an a-linear, machinic line. Here the algorithm forms a derivative, metric measure that evaluates productivity, process and speculation along the endless line, which runs in spirals and feedback loops, far from still dwelling in the stasis of the product, instead continuously working the lines and relations between production, circulation and consumption. (Raunig 2015: 171f.) At the same time, algorithms encode a certain quantity of social knowledge that is extracted from the work of scientists, especially mathematicians and programmers, and from various user activities. Deleuze/Guattari write in Anti-Oedipus that the codes of science and technology, deterritorialized by capital, generate a liquefied machine surplus value that supplements the surplus value produced by living labor, so that living surplus value and machine surplus value together form the totality of a flow. (Deleuze/Guattari 1974: 298) At this point Matteo Pasquinelli distinguishes between two forms of algorithms, namely algorithms that translate information into information and algorithms that accumulate information and extract metadata from the respective collections. (Cf. Pasquinelli 2011) The economic importance of metadata, similar to that of derivatives, lies in their function of acting as monetary capital and, at the same time, in their governance function, which in the financial industry is used to spread, trigger and normalize risks. With regard to the normalization of risks, metadata can in some ways perform a valuation function, and at the same time they must be realized in money, as the measure of all commodities. 
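
Pasquinelli’s distinction between algorithms that translate information into information and algorithms that accumulate information and extract metadata from the collection can be sketched in a few lines. The message format, the field names, and the choice of metadata (message counts per sender and per hour) are hypothetical and serve only to make the contrast visible: the first function returns a transformed message and keeps nothing, while the second retains every record and derives information about the collection as a whole.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Message:
    # Hypothetical record format, for illustration only.
    sender: str
    hour: int  # hour of day, 0-23
    text: str


def translate(msg: Message) -> Message:
    """Information into information: transform one message, retain nothing."""
    return Message(msg.sender, msg.hour, msg.text.upper())


class MetadataExtractor:
    """Accumulate messages and extract metadata from the growing collection."""

    def __init__(self) -> None:
        self.archive: List[Message] = []

    def ingest(self, msg: Message) -> None:
        self.archive.append(msg)

    def metadata(self) -> Dict[str, Counter]:
        return {
            "per_sender": Counter(m.sender for m in self.archive),
            "per_hour": Counter(m.hour for m in self.archive),
        }


if __name__ == "__main__":
    extractor = MetadataExtractor()
    for m in [Message("ana", 9, "hi"), Message("ben", 9, "hello"), Message("ana", 22, "bye")]:
        print(translate(m).text)
        extractor.ingest(m)
    print(extractor.metadata()["per_sender"].most_common(1))  # [('ana', 2)]
```

Only the second function grows more informative as it runs, since its output concerns patterns across the archive rather than the content of any single message.
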
The massive accumulation of information and the extraction of metadata, performed daily in the global digital networks, indicate new complex fields of exploitation, now known as “Big Data”.

1 For example, it has been attested that the US particle physicist Richard Feynman, with his diagrams depicting the movement of electrons and photons, whose analysis actually requires an abstract mathematical apparatus, achieved a first-rate didactic feat of representation. However, his diagrams can also be translated back into differential equations and fed into simulation models that emphasize the production aspect more strongly.

2 For Deleuze/Guattari, the revolutionary diagram implies an a-structural process of differentiation, which is no longer unified through binarity or totalization, but functions through very mobile procedures of modulation and variation, which are directed against the fixed power structures and diagrams.

3 Mathematics works in operative relation to the models of physics. With the generation of complex models, mathematics even invents the particles itself; it thus produces an artificial nature, which is then possibly confirmed in experiments.

4 It should be noted here that Apple, with its ramified strategy of soaking up government innovations in order to use them in such a way that the content (film, music, apps, addresses) is accessible primarily on its own products (iPod, iPhone, iPad), increases the opportunity costs for customers who buy a device from a competitor such as Nokia or Samsung.

5 A processor is a machine designed to execute algorithms in time, in a structure that is understandable to the machine.

Barad, Karen (2012): Agential Realism: On the Importance of Material-Discursive Practices. Berlin.